Abstract

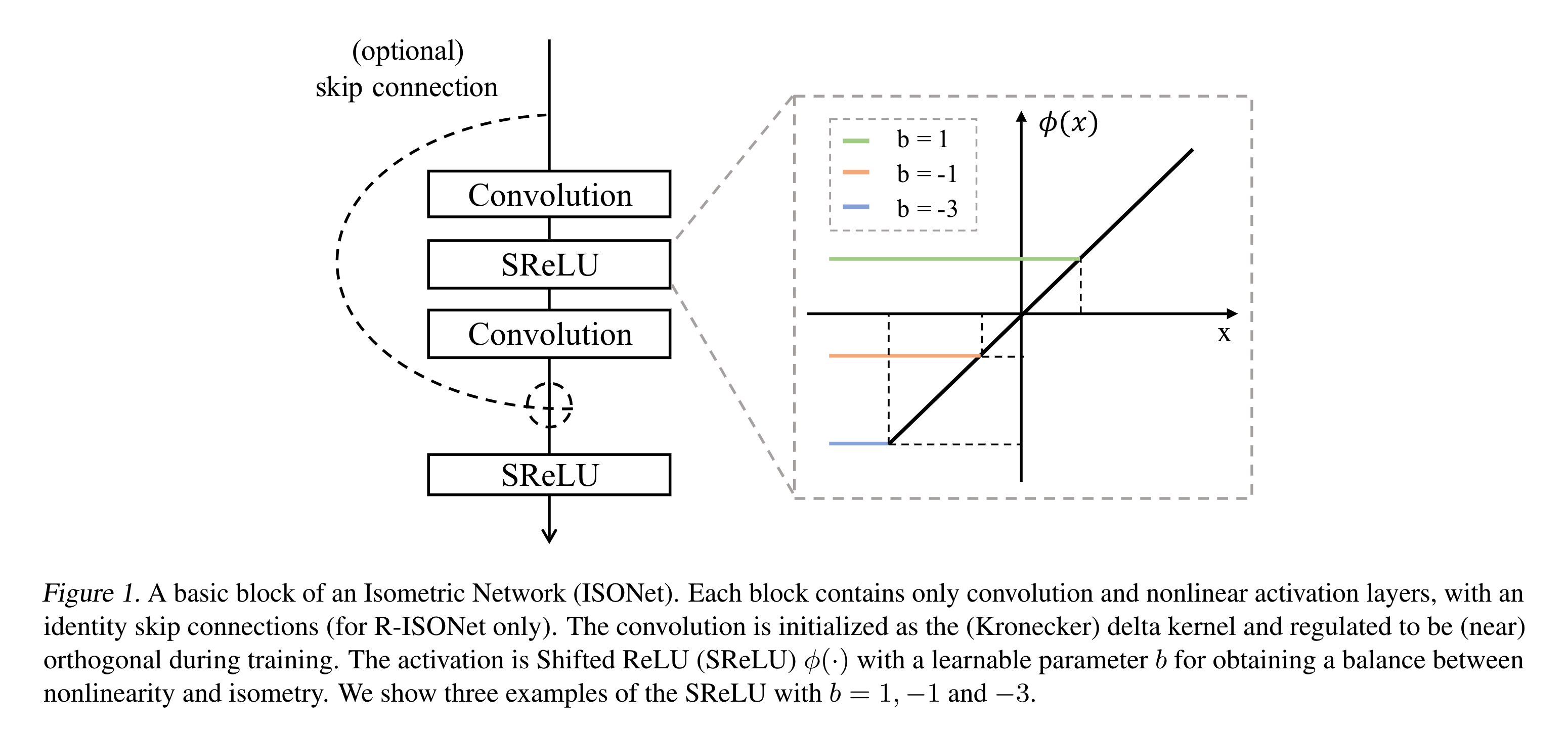

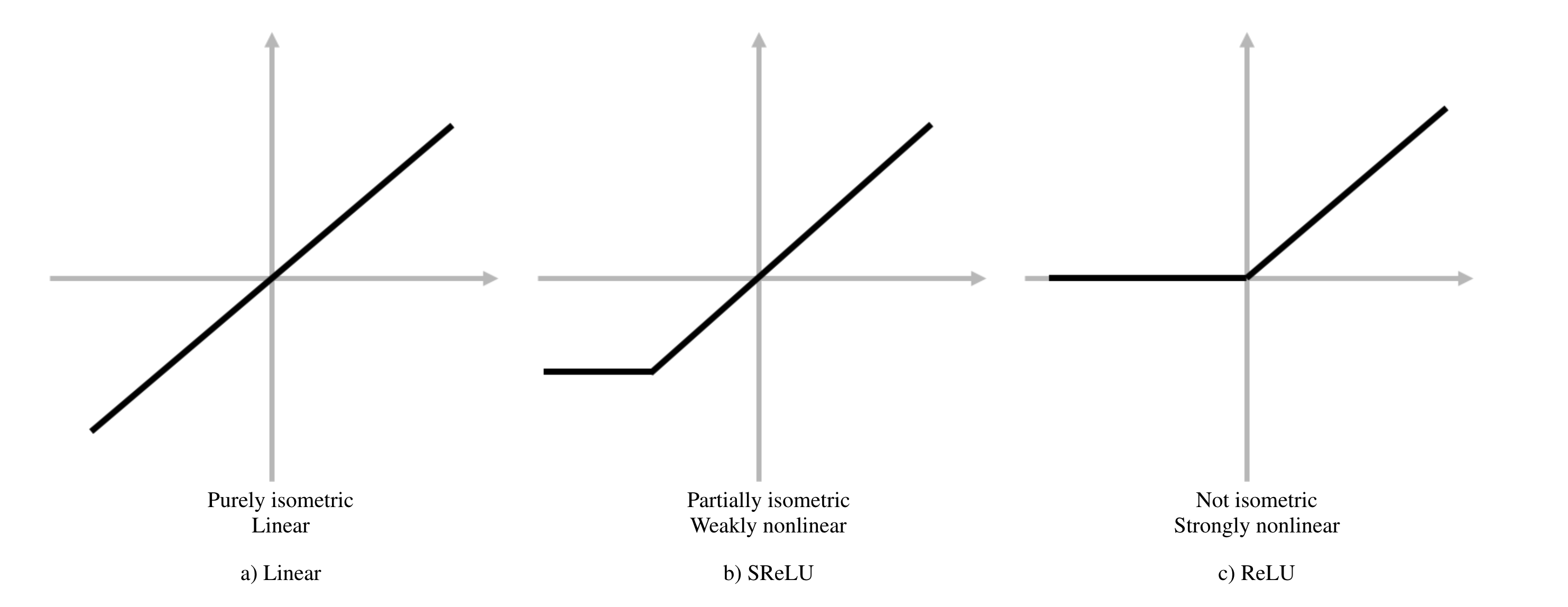

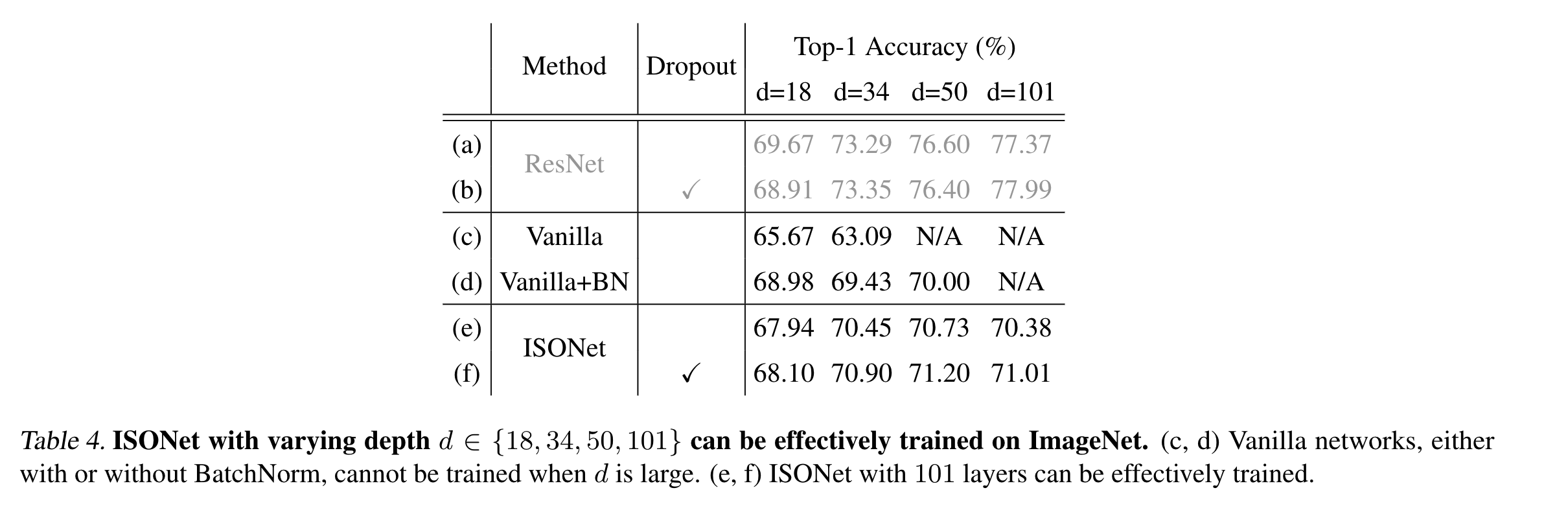

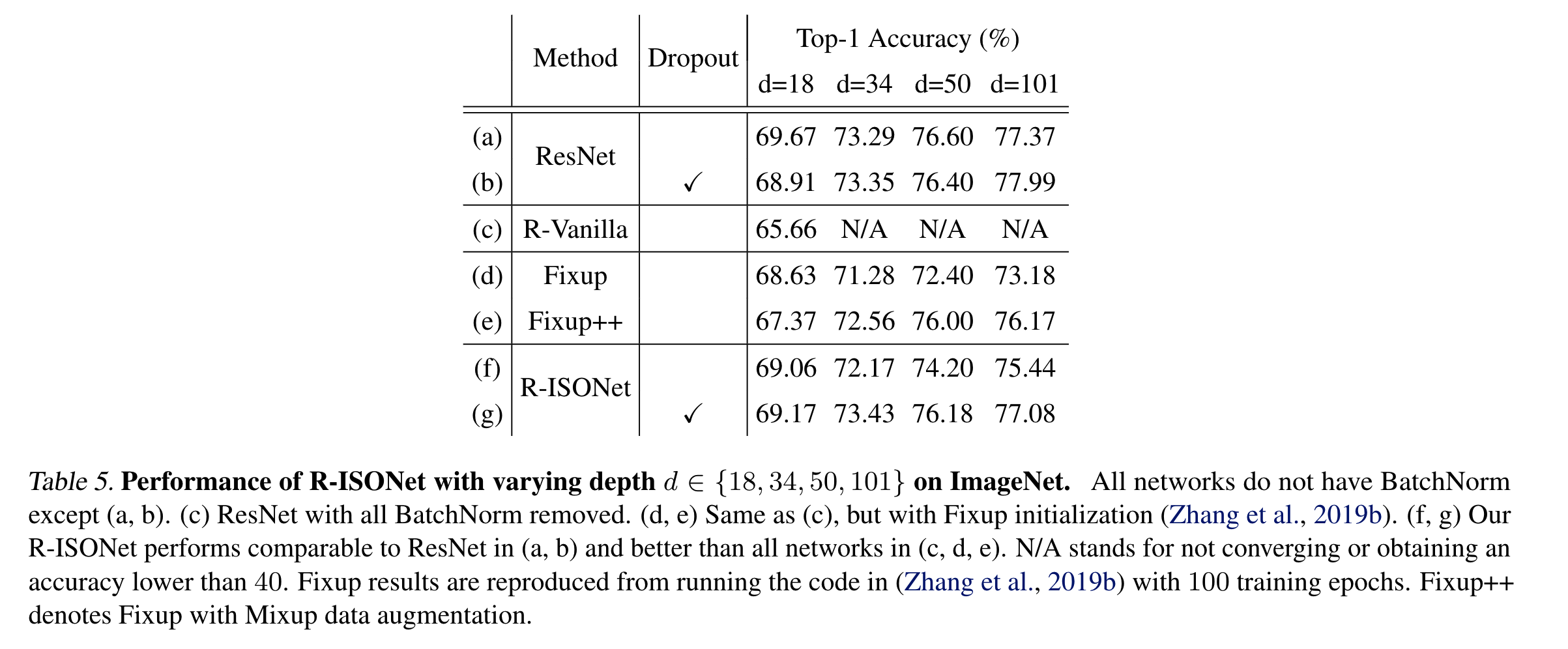

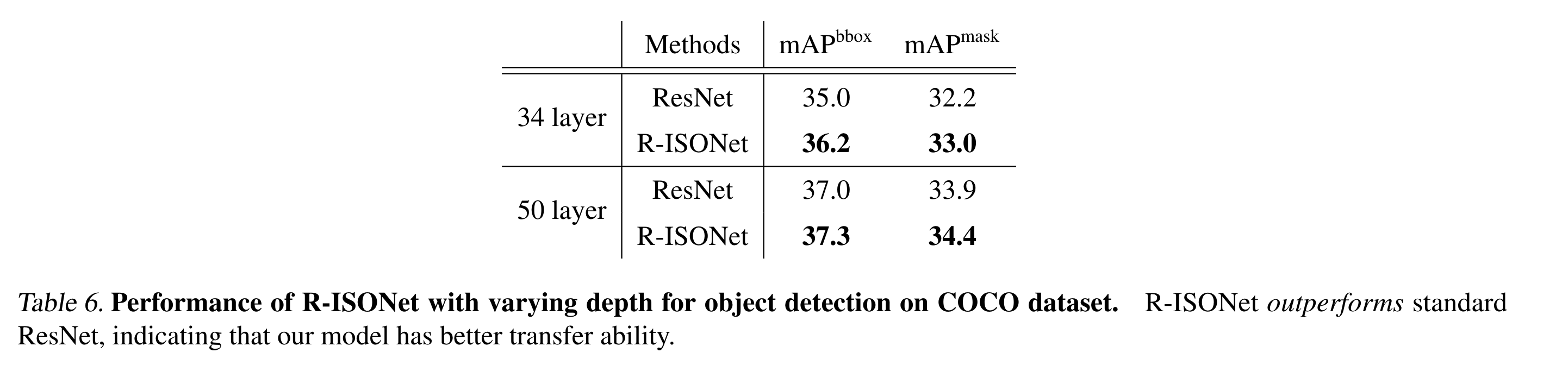

Initialization, normalization, and skip connections are believed to be three indispensable techniques for training very deep convolutional neural networks and obtaining state-of-the-art performance. This paper shows that deep vanilla ConvNets without normalization or skip connections can also be trained to achieve surprisingly good performance on standard image recognition benchmarks. This is achieved by constraining the convolution kernels to be near-isometric during initialization and training, and by using a variant of ReLU that is shifted towards being isometric. Further experiments show that, when combined with skip connections, such near-isometric networks achieve performance on par with (for ImageNet) and better than (for COCO) the standard ResNet, without any normalization.
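To make the two ingredients named in the abstract more concrete, here is a minimal pure-Python sketch. It is not the authors' implementation. The function names, the specific form `max(x, -b)` for the shifted ReLU, the default `b = 1.0`, and the row-orthogonality penalty are all assumptions made for illustration. In particular, the penalty only checks that flattened filters are orthonormal, which is a simplified proxy and does not by itself guarantee that the full convolution operator is an isometry.

```python
# Illustrative sketch only; names, defaults, and the penalty are assumptions,
# not the authors' code.

def shifted_relu(x, b=1.0):
    """Shifted ReLU max(x, -b). b = 0 is plain ReLU; a larger b passes more
    negative inputs through unchanged, so the map is closer to the identity."""
    return [max(v, -b) for v in x]


def delta_kernel(channels, size=3):
    """Kernel indexed [out][in][row][col]: 1 at the centre tap when out == in,
    0 elsewhere, so convolving with it returns the input unchanged."""
    c = size // 2
    return [[[[1.0 if (o == i and r == c and s == c) else 0.0
               for s in range(size)]
              for r in range(size)]
             for i in range(channels)]
            for o in range(channels)]


def orthogonality_penalty(kernel):
    """Squared Frobenius norm of W W^T - I, where each row of W is one
    flattened output filter. Zero means the filters are orthonormal."""
    rows = [[w for plane in filt for line in plane for w in line]
            for filt in kernel]
    total = 0.0
    for a, ra in enumerate(rows):
        for b, rb in enumerate(rows):
            dot = sum(p * q for p, q in zip(ra, rb))
            total += (dot - (1.0 if a == b else 0.0)) ** 2
    return total


kernel = delta_kernel(channels=4)
penalty_at_init = orthogonality_penalty(kernel)  # delta init: orthonormal filters
kernel[0][1][1][1] = 0.5                         # perturb one weight
penalty_after = orthogonality_penalty(kernel)    # drift away from orthonormality
activations = shifted_relu([-3.0, -0.5, 2.0], b=1.0)
```

In a training loop, a penalty of this kind would typically be added to the task loss with a small weight so that the kernels stay close to their near-isometric starting point; how the weight is chosen is left open here.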

Video

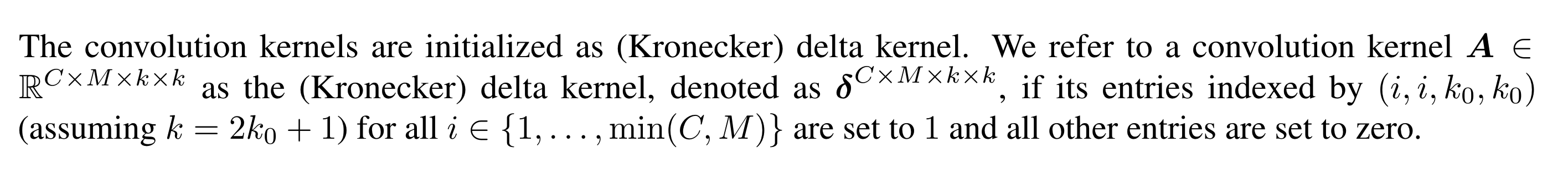

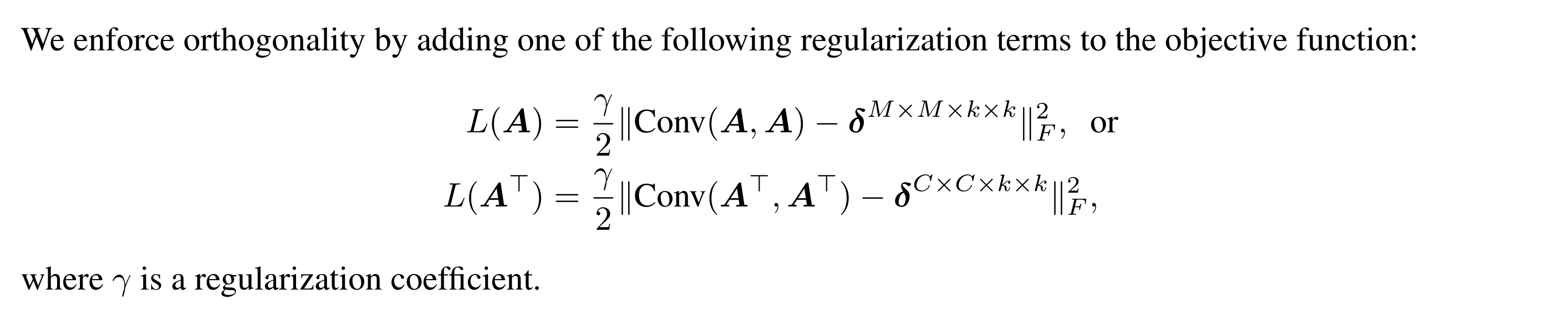

Method

Results

Paper

Bibtex

@InProceedings{qi2020deep,
  author={Qi, Haozhi and You, Chong and Wang, Xiaolong and Ma, Yi and Malik, Jitendra},
  title={Deep Isometric Learning for Visual Recognition},
  booktitle={ICML},
  year={2020}
}

Acknowledgements

The authors acknowledge support from the Tsinghua-Berkeley Shenzhen Institute Research Fund. Haozhi is supported in part by DARPA Machine Common Sense. Xiaolong is supported in part by DARPA Learning with Less Labels. We thank Yaodong Yu and Yichao Zhou for insightful discussions on orthogonal convolution, and the members of BAIR for fruitful discussions and comments.